How can you prevent insider threats when none of your insiders are actually “inside”?

Chris Smith

Security teams can be so focused on blocking cyberattacks from outside that they turn a blind eye to potential threats within their own organizations.

In fact, 34% of all breaches are caused by insider threats.

Insider threats are uniquely difficult to defend against because insiders inherently require an elevated level of trust and access to get their jobs done. For example, system administrators and other IT professionals may have a legitimate need to access sensitive systems and data. But can that trust be used as a cover?

Insider attacks remained undetected for an average of 207 days in 2019, with a mean time to contain of 73 days. In 2019, breaches caused by malicious cybercriminals took even longer to identify and contain (314 days), with an average cost of more than $1.6 million.

Despite the challenges, you can effectively defend against insider threats. In fact, as I’ll explore in this blog, many of the most infamous insider attacks could have been prevented with the right combination of policies, training, systems, and oversight.

Are you prepared for an insider attack? This is how you get started:

Cybersecurity Incident Response Template

What are insider threats?

Insider threats take many forms. Some are malicious actors looking for financial gain. Others are simply careless or unsuspecting employees who click an email link, only to unleash a torrent of malware. Insider attacks may be performed by people unwittingly lured into committing bad behavior.

The Verizon Data Breach Investigations Report (DBIR) explains insider threats this way: “An insider threat can be defined as what happens when someone close to an organization, with authorized access, misuses that access to negatively impact the organization’s critical information or systems.”

By extension, “insiders” aren’t exclusively people who work for your organization directly. Insiders include consultants, third-party contractors, vendors, and anyone who has legitimate access to some of your resources.

The Verizon Insider Threat Report defines five categories of actors behind insider threats:

- The Careless Worker: An employee/partner who performs inappropriate actions that aren’t intentionally malicious. They’re often looking for ways to get their jobs done, but in the process misuse assets, don’t follow acceptable use policies, and install unauthorized or dubiously sourced applications.

- The Feckless Third Party: A partner who compromises security through negligence, misuse, or malicious access to or use of an asset. Sometimes it’s intentional and malicious; sometimes it’s just due to carelessness. For example, a system administrator might misconfigure a server or database, making it open to the public rather than private and access-controlled, inadvertently exposing sensitive information.

- The Insider Agent: A compromised insider either recruited, bribed, or solicited by a third party to exfiltrate information and data. People under financial stress are prime targets, as are conscientious objectors who disagree with the corporate mission.

- The Disgruntled Employee: A jilted or maligned employee who is motivated to bring down an organization from the inside by disrupting the business and destroying or altering data.

- The Malicious Insider: A person with legitimate privileged access to corporate assets, looking to exploit it for personal gain, often by stealing and repurposing the information.

Insider threat examples: There are plenty of examples of each type of inside actor, from conspirators (American Superconductor) to malicious insiders looking for financial gain (Otto), to conscientious objectors (Edward Snowden), to careless or unwitting actors.

I’ll delve into those case studies shortly, but first, let’s talk about the broad impact of insider attacks.

Impact of insider attacks

Columbia University researchers surveyed the most common types of insider threat activities. The list ranges from seemingly innocuous actions taken by individuals to intentionally illegal activities:

- Unsanctioned removal, copying, transfer, or other forms of data exfiltration

- Misusing organizational resources for non-business related or unauthorized activities

- Data tampering, such as unsanctioned changes to data

- Deletion or destruction of sensitive assets

- Downloading information from dubious sources

- Using pirated software that might contain malware or other malicious code

- Network eavesdropping and packet sniffing

- Spoofing and illegally impersonating other people

- Devising or executing social engineering attacks

- Purposefully installing malicious software

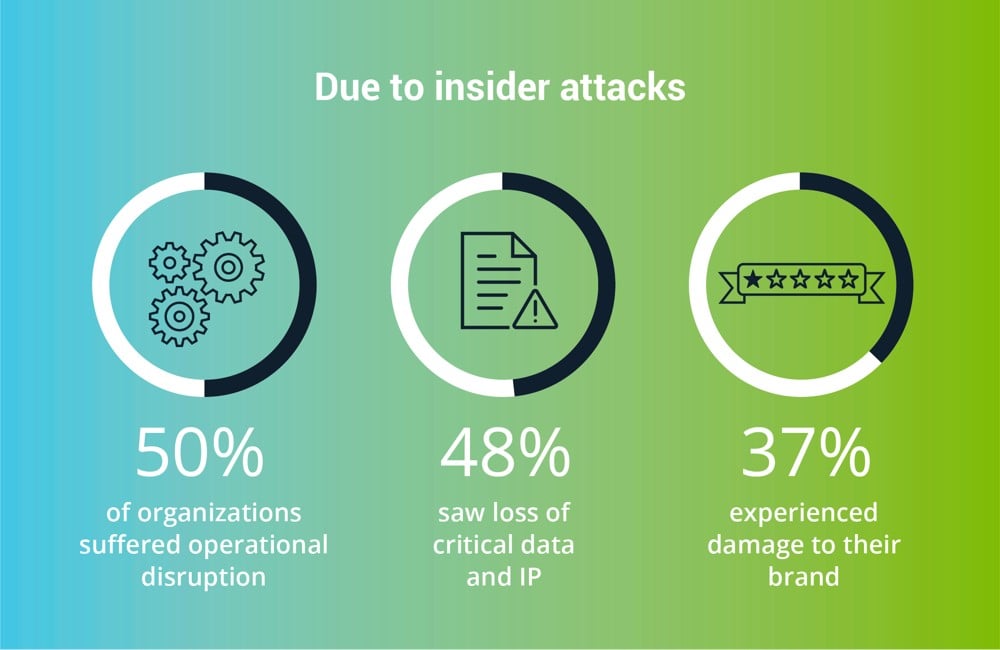

Whether the damage is caused intentionally or accidentally, the consequences of insider attacks are very real.

Other impacts include loss of revenue, loss of competitive edge, increased legal liabilities, and financial fallout.

More than half of IT security professionals say their organizations have experienced a phishing attack. Twelve percent have experienced credential theft and 8% man-in-the-middle attacks. These numbers indicate that cybercriminals are looking for ways into an organization. In some cases, once a cybercriminal has compromised an account, they can use the information it exposes to find and pressure an individual who is susceptible to being turned, morphing an outside attack into an insider attack.

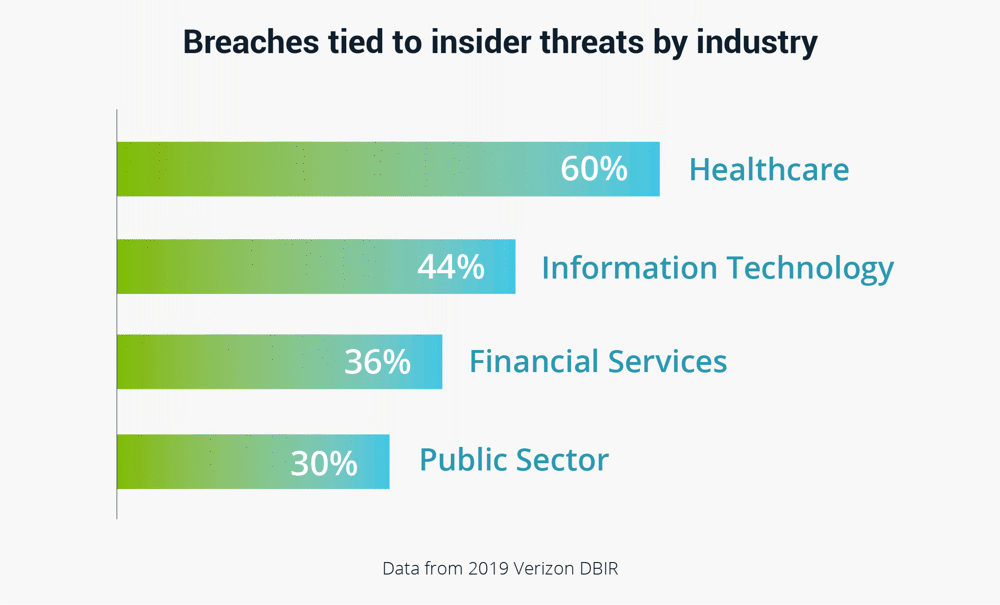

Some industries are especially prone to insider attacks. According to one widely cited study, nearly 60% of healthcare breaches are tied to insider actions, with stolen credentials, privilege abuse, and phishing attacks topping the list of tactics. Forty-four percent of technology company breaches are due to inside actors.

Preventing insider attacks is getting more difficult

The attack surface has been evolving, making it increasingly difficult to detect and prevent insider attacks. The prevalence of BYOD, the proliferation of SaaS tools and applications, and the migration of data to the cloud have changed the nature of the corporate perimeter. The variety, breadth, and dispersed nature of access points make it harder for you to control the security environment and give attackers the upper hand in hiding their tracks.

These changes have cybersecurity experts and IT departments concerned that users accessing systems from outside the corporate perimeter will increase the likelihood of data leakage.

It’s not only that there are more devices used to access the corporate network; it’s that so many of the phones and laptops are unsecured, making it harder for you to detect rogue devices within the forest of benign ones.

On average, nearly a quarter of all employees are privileged users. Privileged users have access to a wider array of sensitive systems and data than standard users. Some privileged users may have a legitimate need for that increased access. But not everyone does. When so many insiders have elevated privileges, it’s hard to differentiate between legitimate and aberrant behavior.

These days, during the COVID-19 pandemic, there are many more “insiders” working outside of an organization. People working remotely expect and need the same access to systems that they have while in the office. Yet, IT teams have less visibility and control, which increases the risk of insider threats.

In some organizations, employees are being let go. When this happens quickly, proper off-boarding protocols and processes may be forgotten. Privileged accounts may remain enabled, employees may retain company-issued laptops, and passwords may not be changed or disabled as they should. Gaps like these give former employees the opportunity to steal IP, plant malware, and exact revenge.
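To make those off-boarding gaps concrete, here is a minimal sketch of an audit that flags departed users whose accounts are still enabled or whose laptops are still out. The `Account` record and its fields are hypothetical; in practice you would populate them from your HR system and directory service.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Account:
    # Hypothetical record; real data would come from HR and directory exports.
    username: str
    enabled: bool
    privileged: bool
    termination_date: Optional[date]  # None while the person is still employed
    laptop_returned: bool = True


def offboarding_gaps(accounts, today):
    """Return (username, issue) pairs for departed users with open gaps."""
    gaps = []
    for acct in accounts:
        if acct.termination_date is None or acct.termination_date > today:
            continue  # still employed, nothing to audit
        if acct.enabled:
            issue = ("privileged account still enabled"
                     if acct.privileged else "account still enabled")
            gaps.append((acct.username, issue))
        if not acct.laptop_returned:
            gaps.append((acct.username, "company laptop not returned"))
    return gaps
```

Running a check like this on a schedule, rather than only at termination time, helps catch accounts that slip through when people are let go quickly.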

As all of these risk factors increase, insiders (and the criminals who stalk them) have become more sophisticated in their use of technology and in their ability to cover their tracks and navigate corporate networks surreptitiously.

That said, it’s possible to detect insider threats before they cause damage. First, let’s explore some high-profile insider threats from the past few years. Then, I’ll cover how these types of breaches could have been discovered and possibly prevented.

Unpacking real-world insider threat case studies

Conscientious Objector: Edward Snowden & NSA

Edward Snowden is perhaps one of the best-known examples of an insider threat. He was a system administrator for the United States government’s National Security Agency (NSA) through defense contractor Booz Allen Hamilton. He stole and shared millions of classified documents with the press, which were subsequently made public. His goal was to reveal the scope of the U.S. government’s intelligence apparatus, and in doing so to both damage it and effect change. He was not motivated by fame or greed; he wanted to change the way the intelligence agencies operated.

Because he was a system administrator (a privileged user and the ultimate insider), he had broad access to the NSA’s most classified systems and their data and was able to stealthily obtain and distribute enormous amounts of highly sensitive data. By the time he was discovered, he had been able to flee the United States, and he eventually found sanctuary in Russia.

Economic Espionage: Greg Chung, Rockwell & Boeing, China

Over the course of 30 years, Greg Chung stole $2 billion worth of Boeing trade secrets related to the U.S. Space Shuttle and gave them to China. Originally from China, he became a naturalized US citizen in the mid-1970s and started working at aerospace company Rockwell soon after. His job as a stress analyst with high-level security clearance granted him access to classified files. Over time, he stole 225,000 documents related to the Space Shuttle boosters and guidance systems and the C-17 Globemaster troop transport used by the U.S. Air Force. He was tried for economic espionage and sentenced to more than 15 years in prison.

His high-level security clearance allowed him to access highly sensitive and classified documents. In reality, he should not have been able to access those documents, nor should he have been able to smuggle them out of the Rockwell and Boeing corporate offices.

Corporate Espionage: Dan Karabasevic, American Superconductor, Sinovel

Dan Karabasevic, an engineer for the wind turbine company American Superconductor, was recruited by their chief rival, Chinese company Sinovel, to steal critical intellectual property.

The software he stole and sent to Sinovel was used to regulate the flow of electricity between wind turbines and the electric grid. The theft, and Sinovel’s subsequent refusal to pay for past and pending shipments, crushed American Superconductor: its stock price fell by half, wiping out more than $1 billion in value. To survive, the company ended up laying off more than 700 employees.

At his trial, his lawyer explained how Karabasevic’s life was in shambles when he was wooed by Sinovel. According to published reports, his lawyer said, “his client’s actions stemmed from ‘frustration’ about a failed marriage, which had been strained by his trips abroad for work as a department manager involved in software development, followed by a demotion to the customer service department last year … an attorney for American Superconductor said there is evidence that Sinovel wooed Karabasevic by offering him an apartment, a five-year contract at twice his current pay, and ‘all the human contact’ he wanted, ‘in particular, female co-workers.’”

The company had extensive safeguards in place to mitigate the risk of intellectual property theft directly by Sinovel. But it didn’t count on Sinovel gaining the services of an insider.

Corporate Espionage: Anthony Levandowski, Uber, Google

Anthony Levandowski stole trade secrets from Google when he was an engineer involved with their self-driving car project, and used the proprietary information when he founded Otto, a self-driving truck startup that was eventually acquired by Uber. He secretly downloaded around 14,000 documents, which were subsequently used to jump-start the startup he founded.

He leveraged his insider status with Google for personal gain, stealing information that would accelerate his next venture.

Other examples of insider threats include:

- Facebook: A security engineer abused his access to stalk women and was subsequently fired.

- Coca-Cola: A malicious insider stole a hard drive containing personal information about 8,000 employees.

- SunTrust Bank: An insider stole personal information including bank account numbers for 1.5 million bank clients, purportedly to distribute it to a criminal organization.

How to detect signs of insider threat to your privileged accounts

Some privileged user behaviors leave data exhaust.

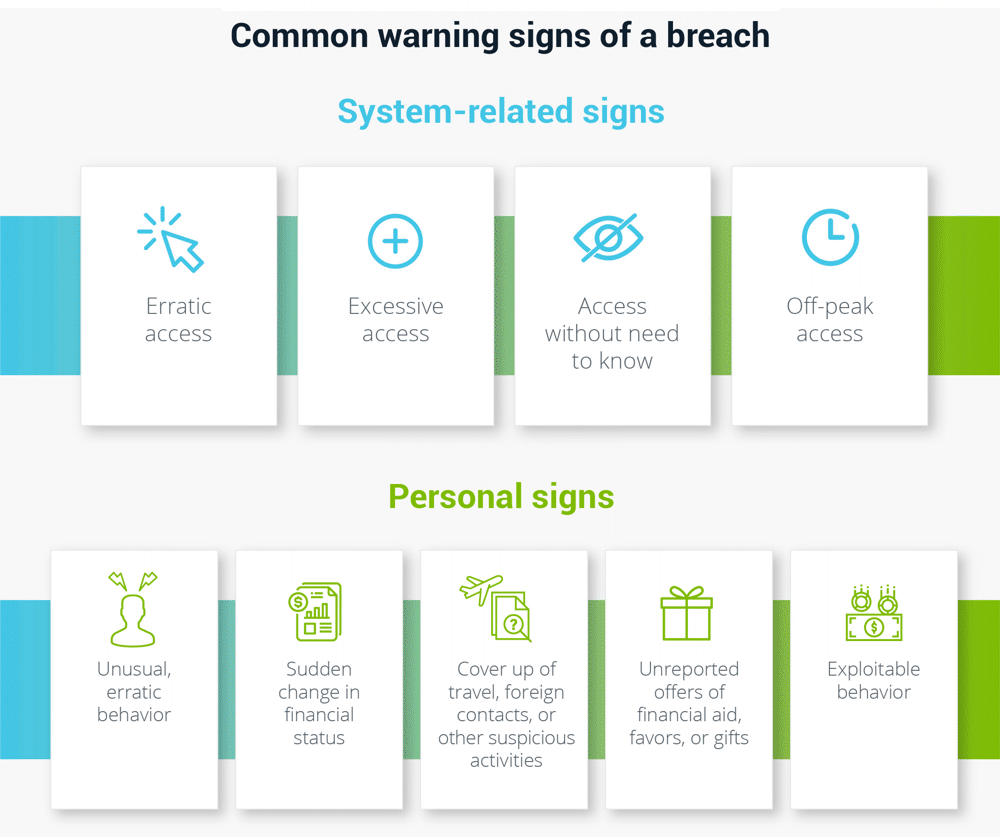

Common warning signs of an insider breach include:

- Erratic access: Sudden increase in privileged account use, or attempts to access privileged accounts that the user would not normally access

- Excessive access: High number of privileged accounts accessed in a burst of time

- No need to know: Attempts or successful access to systems and data without a valid “need-to-know”

- Off-peak access: Accounts are accessed at unusual times of the day or from atypical locations. Note that especially for remote workers, work may happen outside of “traditional” working hours. Track what is “typical” for your organization so you can clearly see when unexpected changes occur.
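Two of these warning signs, excessive access and off-peak access, translate fairly directly into simple rules. The sketch below assumes access events arrive as `(user, account, timestamp)` tuples; the ten-minute window, the three-account limit, and the 07:00 to 20:00 “typical” hours are illustrative placeholders, not recommendations, and should be tuned to what is normal for your organization.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def excessive_access(events, window=timedelta(minutes=10), max_accounts=3):
    """Flag users who touch more than max_accounts distinct privileged
    accounts within any sliding time window of the given length."""
    by_user = defaultdict(list)
    for user, account, ts in events:
        by_user[user].append((ts, account))

    flagged = set()
    for user, items in by_user.items():
        items.sort()  # chronological order
        start = 0
        for end in range(len(items)):
            # Shrink the window until it spans at most `window` of time.
            while items[end][0] - items[start][0] > window:
                start += 1
            distinct = {acct for _, acct in items[start:end + 1]}
            if len(distinct) > max_accounts:
                flagged.add(user)
                break
    return flagged


def off_peak_access(events, typical_hours=range(7, 20)):
    """Return events whose hour falls outside the organization's typical hours."""
    return [e for e in events if e[2].hour not in typical_hours]
```

Neither rule proves malicious intent on its own; treat hits as prompts for a closer look, especially for remote workers with irregular schedules.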

You can also look for other warning signs in an individual’s personal conduct. Any single warning sign may be explained; it’s the pattern of behavior that indicates a potential threat.

- Unusual, erratic behavior, such as disgruntled attitude, or a change in normal working hours

- Change in financial status, such as sudden, unexplained affluence or extreme debt

- Attempts to cover up travel, interest in foreign contacts, or other suspicious activities

- Unreported or undocumented offers of financial aid, favors, or gifts from a foreign national

- Exploitable behavior which could be used against someone, such as past criminal activity, gambling, substance abuse, sexual deviance, problems at work or at home

Tools such as Privileged Behavior Analytics (PBA) can remove some of the guesswork and flag unusual privileged user behavior. This often starts by creating a detailed, baseline snapshot of each user’s typical privileged account activity, and organization-wide norms. Then, you can use real-time behavior analytics and pattern recognition algorithms to spot and score potential threats, and prioritize issues based on their threat scores.
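The baseline-then-score idea can be illustrated with a few lines of code. This is a deliberately simplified sketch, not how any particular PBA product works: it assumes the only signal is each user’s daily count of privileged accesses, scores today’s count by how many standard deviations it sits above that user’s own baseline, and uses a hypothetical threshold of 3.0.

```python
from statistics import mean, pstdev


def build_baseline(history):
    """history: {user: [daily privileged-access counts]} -> {user: (mean, stdev)}"""
    return {user: (mean(counts), pstdev(counts)) for user, counts in history.items()}


def threat_score(baseline, user, todays_count):
    """Standard deviations above the user's own baseline (never negative)."""
    mu, sigma = baseline[user]
    # Assumption: floor the spread at 1 access so very steady users
    # do not produce division by zero or wildly inflated scores.
    sigma = max(sigma, 1.0)
    return max(0.0, (todays_count - mu) / sigma)


def prioritize(baseline, today, threshold=3.0):
    """Return (score, user) pairs at or above the threshold, highest first."""
    scored = [(threat_score(baseline, user, count), user) for user, count in today.items()]
    return sorted((pair for pair in scored if pair[0] >= threshold), reverse=True)
```

Scoring each user against their own history matters because “normal” for a database administrator looks very different from “normal” for a help desk technician.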

To respond quickly, establish a map view of privileged users and their associated accounts, and set alerts and alarms for when established thresholds are crossed. Finally, fine-tune the criteria as you learn more about each user, to prevent false-positive alerts, and also to ensure suspicious behavior does not fall through the cracks.

Who is more likely to be (or become) an insider threat?

One of the challenges for an IT security pro is to discern between intentionally malicious insider threats and those who are coaxed into becoming an insider threat. Understanding an actor’s motivations can help you develop an appropriate mitigation and containment strategy.

Insider threats can come from anywhere, and there are many reasons why someone, willingly or unwillingly, will attempt to breach corporate security protocols.

Some of the more benign reasons include:

- Boredom

- Curiosity

- Working around existing security controls to make it easier to do a task

But not all insiders have good intentions. Malicious motivations include:

- Revenge

- Organizational conflict

- Anger / holding a grudge

- Financial gain (using access and stolen data to make more money or pay off debts)

- Ideology / personal ethics

- Nationalism / patriotism

- Personal or family problems

You can reduce the potential for insider threats before they occur

It might have been possible to mitigate the attacks in the case studies and examples above by implementing monitoring tools to track who accessed which files, and to alert administrators to unusual activity. Implementing and enforcing least privilege access also may have limited the damage.

As part of your cybersecurity program, you should monitor network traffic for unusual activity, such as off-hours access, remote connections, and other outbound activities. Maintain baseline system images and trusted process lists, and regularly monitor systems against these images to identify potentially compromised systems. Search for telltale signs of compromise by temporarily blocking outbound internet traffic and tracking data outflows.
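As a rough illustration of checking systems against a baseline image and a trusted process list, the sketch below hashes every file under a directory, compares the result with an earlier snapshot, and separately reports running process names that are not on the trusted list. The function names are my own, and how you collect the list of running processes is left out because it depends on your platform and tooling.

```python
import hashlib
from pathlib import Path


def snapshot(root):
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def drift(baseline, current):
    """Report files added, removed, or modified since the baseline snapshot."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(
            path for path in baseline.keys() & current.keys()
            if baseline[path] != current[path]
        ),
    }


def untrusted_processes(running, trusted):
    """Return running process names that are missing from the trusted list."""
    return sorted(set(running) - set(trusted))
```

Store the baseline snapshot somewhere the monitored system cannot modify; otherwise a capable insider can simply update the baseline along with the files.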

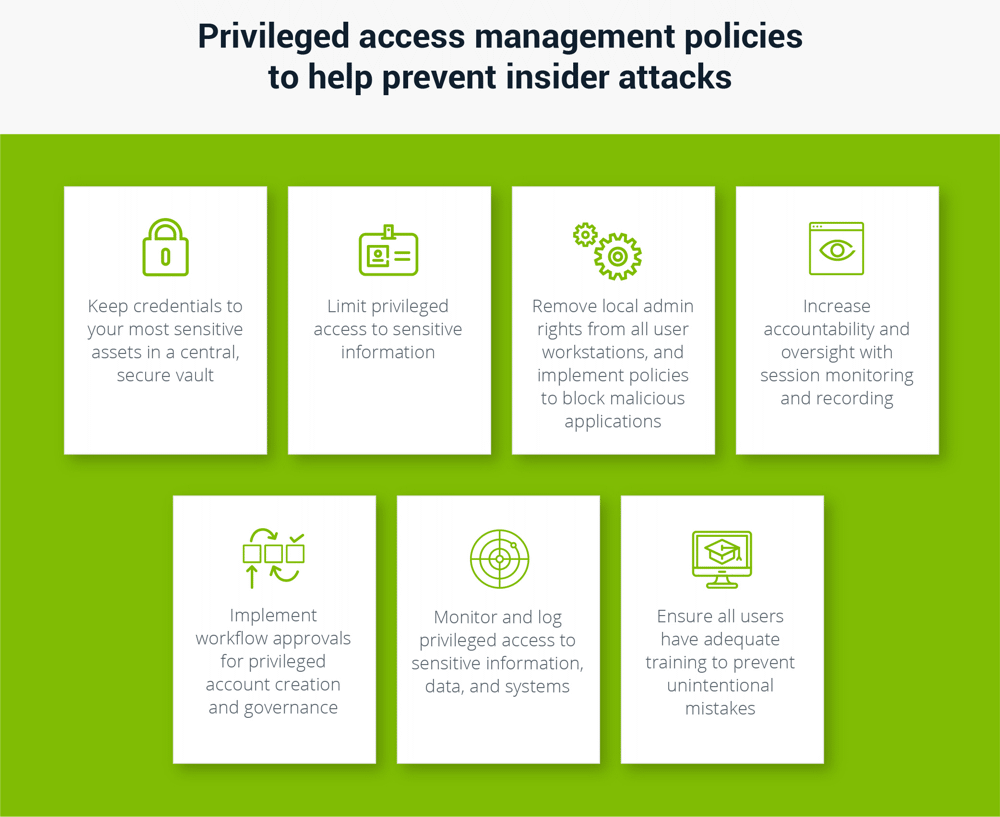

Apply consistent Privileged Access Management (PAM) policies for all workers, whether they’re remote, contractors, or work for a third-party vendor.

- Keep credentials to your most sensitive assets (sensitive applications, databases, root accounts, other critical systems) in a central, secure vault. Allow admins to access it from anywhere, but set temporary time limits where it makes sense, and always track when credentials and secrets are checked out.

- Limit privileged access to sensitive information, such as customer data, personally identifiable information, trade secrets, intellectual property, and sensitive financial data. Employ least privilege policies and tools to provide workers with only the access they need. Remove local admin rights from all user workstations, and implement allow, restrict, and deny policies to block malicious applications.

- Increase accountability and oversight with session monitoring and recording. Implement workflow approvals for privileged account creation and governance. Monitor and log privileged access to sensitive information, data, and systems.

- Ensure all users have adequate training to prevent unintentional mistakes that allow for a cyberattack.
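The first policy above (a central vault, time-limited access, and tracking of every checkout) can be sketched as a toy in-memory model. This is an illustration of the pattern only; the `Vault` class, its one-hour default limit, and its method names are assumptions for this example and do not represent any product’s API. A real vault would also encrypt secrets at rest and typically rotate a credential once its checkout expires.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Vault:
    secrets: dict                                   # secret name -> value
    max_checkout: timedelta = timedelta(hours=1)    # hypothetical default limit
    checkouts: dict = field(default_factory=dict)   # (user, name) -> expiry time
    audit_log: list = field(default_factory=list)   # (time, user, name, outcome)

    def check_out(self, user, name, now):
        """Hand out a secret, record who took it, and start the clock."""
        if name not in self.secrets:
            self.audit_log.append((now, user, name, "denied"))
            raise KeyError(name)
        self.checkouts[(user, name)] = now + self.max_checkout
        self.audit_log.append((now, user, name, "checked_out"))
        return self.secrets[name]

    def is_valid(self, user, name, now):
        """True only while the user's checkout of this secret has not expired."""
        expiry = self.checkouts.get((user, name))
        return expiry is not None and now < expiry
```

Because every checkout lands in the audit log, the same data can feed the behavior monitoring described earlier.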

What to do if an insider attack occurs

Your goal is to respond to insider threats within minutes, not months.

When you suspect a user, account, or system has been compromised, remove or disable its privileged access and block any activities that may be inappropriate, malicious, or risky to the organization.

If the compromise is confirmed, disable the affected user accounts, remove malicious files, and rebuild affected systems.

When a breach is discovered, conduct a forensic analysis to determine how it happened, and prevent it from happening again.

Check out Delinea’s Incident Response Plan Template for more recommendations.

In summary

I hope you found these case studies and examples helpful. Insider threats are a real and pervasive challenge. They’re difficult to prevent, hard to discover, and interminable to clean up. But through a combination of rigorous policies and smartly applied technologies, you can reduce the risk of insider threats and contain the damage if an insider attack occurs.

Delinea helps you monitor unusual behavior with Privileged Behavior Analytics, limit local admin rights with Privilege Manager, and manage credentials and monitor privileged user sessions with Secret Server.

Let’s talk about how a comprehensive PAM solution can secure your critical assets from insider threats.
